Chain of Continuous Thought and a Coincidence

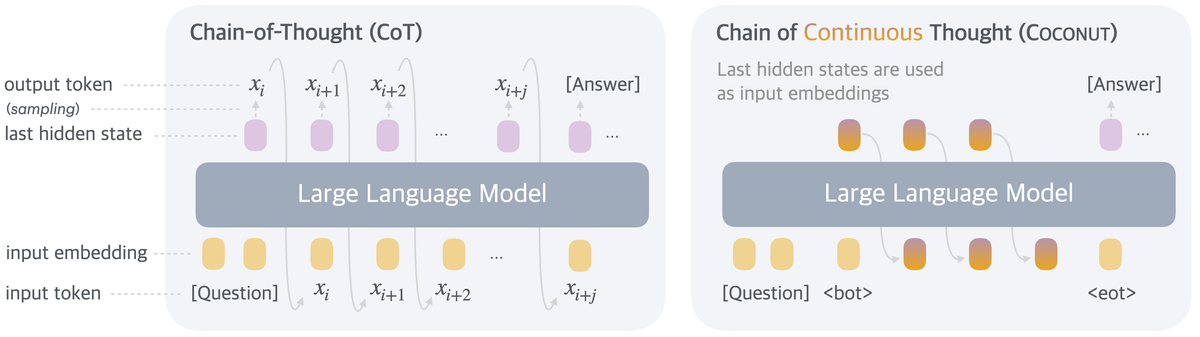

We just uploaded a new video to the Kreasof AI YouTube channel. This time we explain "Chain of Continuous Thought" (Coconut) by Meta AI. Normally, each reasoning step is decoded into a discrete token. With this method, the LLM feeds its own last hidden state straight back in as the next input embedding, a "continuous thought". Reasoning no longer has to pass through the vocabulary, so the "language bottleneck" is bypassed. This makes it a more efficient and flexible way to teach an LLM to reason.
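To make the difference concrete, here is a toy, dependency-free Python sketch comparing a discrete chain-of-thought loop with a continuous-thought loop. Everything in it is hypothetical and for illustration only: `EMBED`, `W`, `step`, `decode` and `reason` are made-up names. A single tanh layer over mean-pooled inputs stands in for a real transformer and its attention. This is not Meta's Coconut code or the Homunculus Project's implementation. The only point it shows is where the hidden state goes. In the discrete loop it is collapsed to one token id and re-embedded. In the continuous loop it is fed back unchanged.

```python
import math
import random

D, VOCAB = 4, 6  # toy hidden size and vocabulary size (hypothetical)

_rng = random.Random(0)
# Hypothetical toy weights: an input embedding table and one dense layer.
EMBED = [[_rng.uniform(-1, 1) for _ in range(D)] for _ in range(VOCAB)]
W = [[_rng.uniform(-1, 1) for _ in range(D)] for _ in range(D)]


def step(inputs):
    """Stand-in for a transformer forward pass: mean-pool the input vectors
    seen so far (a crude proxy for attention), apply one tanh layer, and
    return the resulting "last hidden state"."""
    pooled = [sum(col) / len(inputs) for col in zip(*inputs)]
    return [math.tanh(sum(w * p for w, p in zip(row, pooled))) for row in W]


def decode(h):
    """Language bottleneck: score every token against the hidden state
    (tied embeddings) and keep only the single best token id."""
    logits = [sum(e * hi for e, hi in zip(row, h)) for row in EMBED]
    return max(range(VOCAB), key=lambda t: logits[t])


def reason(start_token, n_steps, continuous):
    """Run n_steps reasoning steps and return every input vector fed to the model."""
    inputs = [EMBED[start_token]]
    for _ in range(n_steps):
        h = step(inputs)
        if continuous:
            nxt = h                 # continuous thought: reuse the hidden state as-is
        else:
            nxt = EMBED[decode(h)]  # discrete CoT: collapse to a token, then re-embed
        inputs.append(nxt)
    return inputs


continuous = reason(start_token=2, n_steps=3, continuous=True)
discrete = reason(start_token=2, n_steps=3, continuous=False)
print(len(continuous), len(discrete))  # 4 4
```

In the discrete run, every input after the first is one of only `VOCAB` possible embedding rows. In the continuous run, each input is the full hidden vector, so nothing is thrown away by `decode` between steps.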

Another fun fact: five months ago, Kreasof AI came up with a very similar idea called an "Internal latent loop", where we feed the model's last hidden states back into its input gate. What a coincidence 🔥

Link to the video explanation:

Link to our project:

GitHub - kreasof-ai/Homunculus-Project: A long-term project on a custom AI architecture, combining cutting-edge machine learning techniques such as Flash-Attention, Grouped-Query Attention, ZeRO-Infinity, BitNet, etc.